WHAT IS MODEL PERFORMANCE MONITORING?

How ML models stay relevant and work as intended.

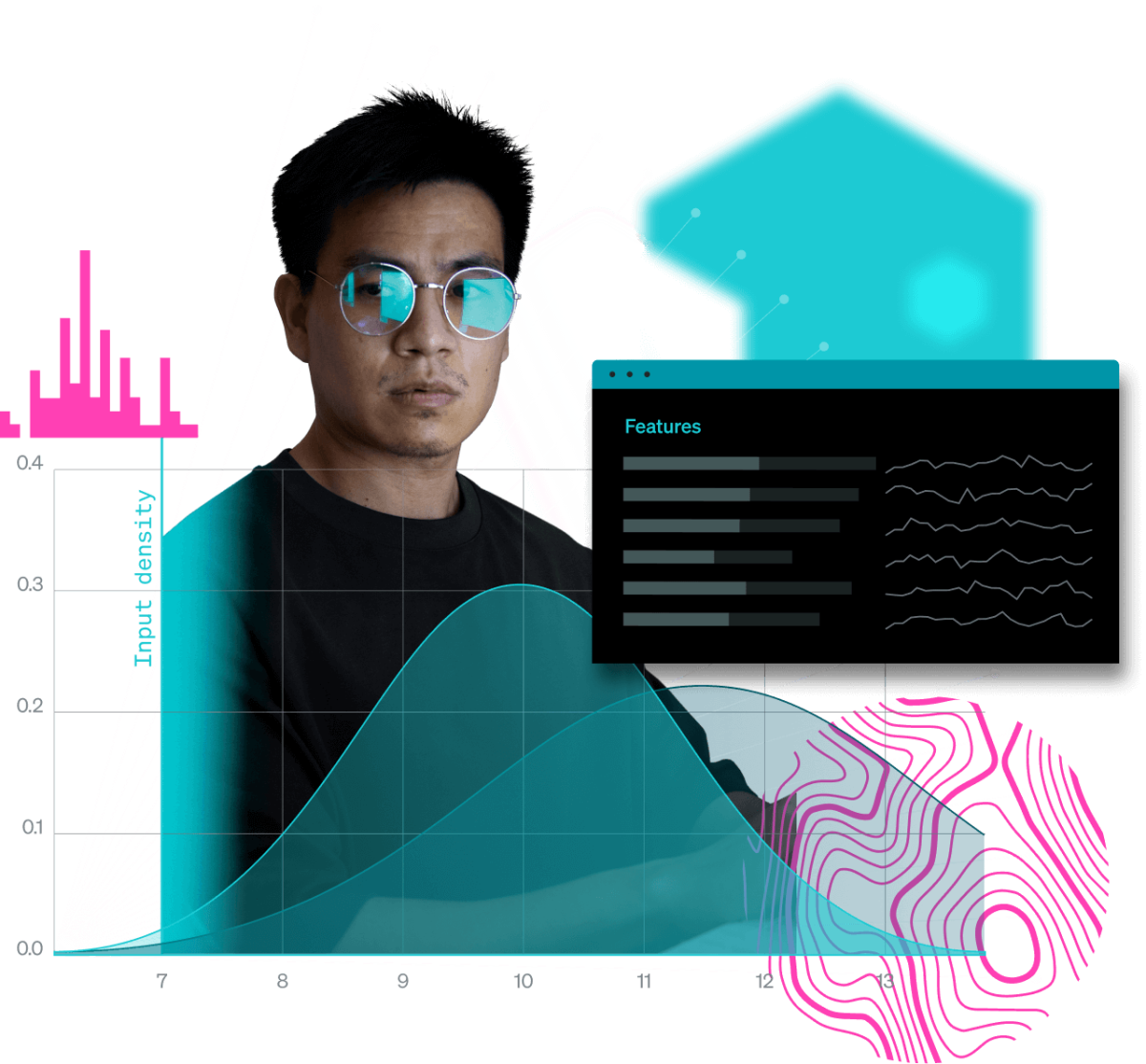

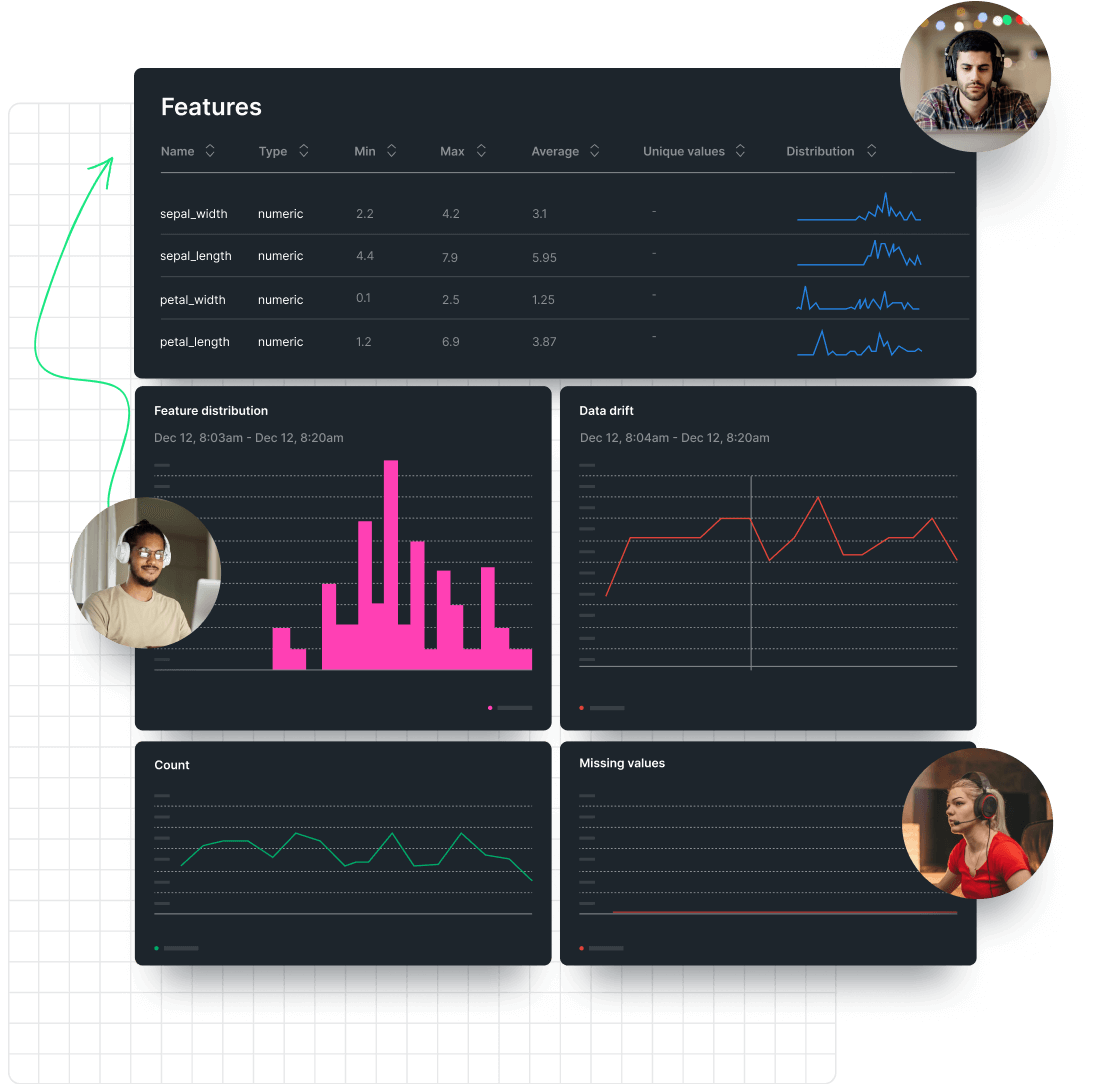

ACCURATE ML MODELS

Tackle model-performance degradation after deployment.

- Detect and resolve data drift and concept drift to keep predictions relevant.

- Configure alerts with New Relic Alerts and Applied Intelligence to resolve problems and reduce noise.

- View statistics on model performance and model features to improve results.

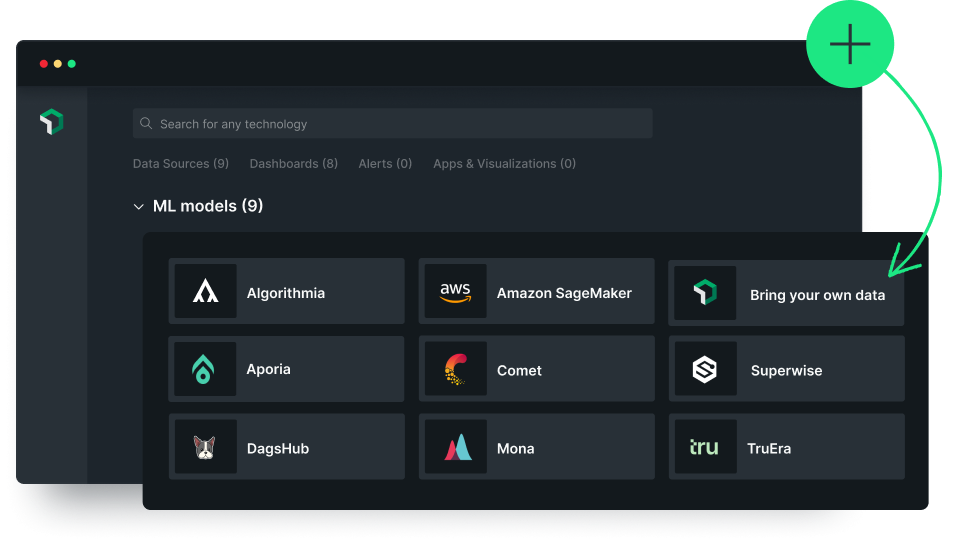

READYMADE INTEGRATIONS

Bring ML telemetry. Reach informed decisions.

- Bring your own data (features, prediction values, etc.) as inference data, aggregated statistics, or custom metrics.

- Use integrations (for example, AWS SageMaker) to add ML model telemetry from other platforms.

- Leverage our diverse community of partners for open-platform data ingestion.

END-TO-END OBSERVABILITY

Visualize models in moments. See their value rise.

- Find and add charts tailored to specific use cases.

- Get instant visibility into your models with out-of-the-box performance-monitoring dashboards.

- Easily track model predictions and drift over time for insights at a glance.

ALIGNMENT ACROSS TEAMS

Improve collaboration around ML with shared data.

- Share a single source of data to close inefficient gaps between ML engineering, DevOps, and data science teams.

- See performance in context on the only full-stack observability platform that tracks ML models.

- Collaborate in a production environment and respond to alerts before your business is impacted.